OpenAI’s Sora 2 sparks antisemitism controversy

Sora 2 is surfacing antisemitic AI videos. Here’s what this means for brand safety, content moderation, and AI trust

Sora 2 was supposed to mark a new chapter for OpenAI, a video-generation tool that promised limitless creative possibilities. But less than two weeks into its launch, the platform is already facing backlash for surfacing antisemitic content created by users.

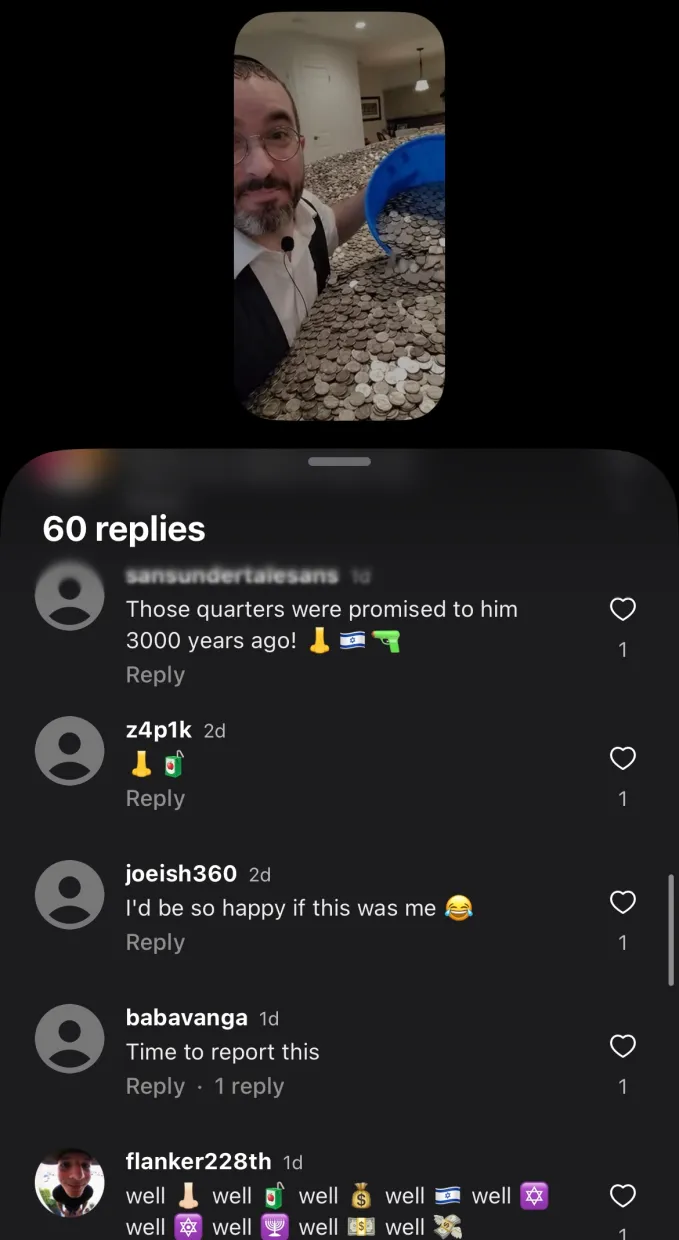

Several videos generated using Sora’s AI tools, including some with stereotypical portrayals of Jewish individuals in money-related scenarios, have gained traction on the platform. One video that depicts a Hasidic Jewish man diving for coins has racked up more than 11,000 likes. Another that features a man in a kippah surrounded by piles of quarters has been remixed into multiple versions, including one using South Park characters.

This article explores what happened, how OpenAI is responding, and what marketers and content leaders should take away from yet another AI content moderation failure.

Short on time?

Here is a table of content for quick access:

What happened on Sora 2?

OpenAI’s Sora 2 launched as an invite-only app on September 30. It allows users to generate videos using text prompts and remix features. But by mid-October, watchdogs including ADWEEK and the Center for Countering Digital Hate (CCDH) had identified antisemitic content gaining popularity in the public feed.

One viral example was based on the prompt “replace her with a rabbi wearing a kippah and the house is full of quarters.” The resulting video showed a man sinking into a room filled with coins, invoking antisemitic tropes. Another video featured football players wearing kippot flipping a coin, followed by a Hasidic character grabbing it and sprinting away.

Thousands of users engaged with the content, remixing and liking the videos. Some comments cheered the stereotypes, while others called them out as offensive. As of publication, these videos were still circulating on the platform.

How OpenAI is responding

OpenAI said Sora 2 includes “multiple layers of safety” meant to prevent harmful content. These include:

- Structured feedback loops

- Prompt filtering and refinement

- Scanning of video frames, captions, and transcripts

- Internal monitoring of trends and emerging risks

But critics are not convinced. CCDH CEO Imran Ahmed called OpenAI’s moderation “not credible,” saying it was predictable that users would create racist content.

The company acknowledged that trying to prevent bias without erasing identities is a difficult balance. Sora’s guardrails were recently adjusted to block content featuring Martin Luther King Jr. after disrespectful prompts went viral. Still, similar interventions have not been made for other troubling videos.

What marketers should know

For marketers using AI tools—or evaluating platforms like Sora for future campaigns—this controversy isn’t just a PR issue. It’s a brand risk moment.

Here are four strategic takeaways to keep in mind as generative video tech goes mainstream:

1. Remixable AI content is a brand safety liability

Sora’s remix function makes it easy for toxic content to spread. It mirrors what happens on platforms like TikTok, but with even less control. Any brand using AI tools to create or distribute content should assume those tools can be misused by others.

2. Platform trust needs to be earned

Public-facing AI platforms are often more reactive than proactive when it comes to moderation. Despite OpenAI’s claims of “red teaming” and internal testing, real users found ways to generate offensive content within days. Trust in platform safety should never be assumed.

3. Realism increases the impact of hate

AI-generated video is more believable than text or images alone. As Emarketer’s Minda Smiley pointed out, realistic depictions can reinforce harmful stereotypes even when viewers know the content is fake. The emotional weight of AI video makes content moderation more urgent.

4. Marketers must stress-test AI tools

It’s no longer enough to test AI tools for output quality or speed. Ethical safety and misuse scenarios should be part of every brand’s due diligence process. If your brand wouldn’t want to be associated with a platform that distributes harmful content, that needs to be reflected in your vendor assessments.

The Sora 2 backlash highlights the limitations of AI guardrails in fast-moving environments. While OpenAI says it is improving its systems, the platform continues to show harmful content, including antisemitism and violence.

For marketers, this is a reminder that content quality is not the only metric that matters. Platform safety, content governance, and audience impact are just as important. AI tools will continue to evolve, but so will the ways they are abused.

Before putting your brand near any emerging platform, make sure their standards align with yours.